Re-Introducing a Smart Way to Edit Notes

Overview

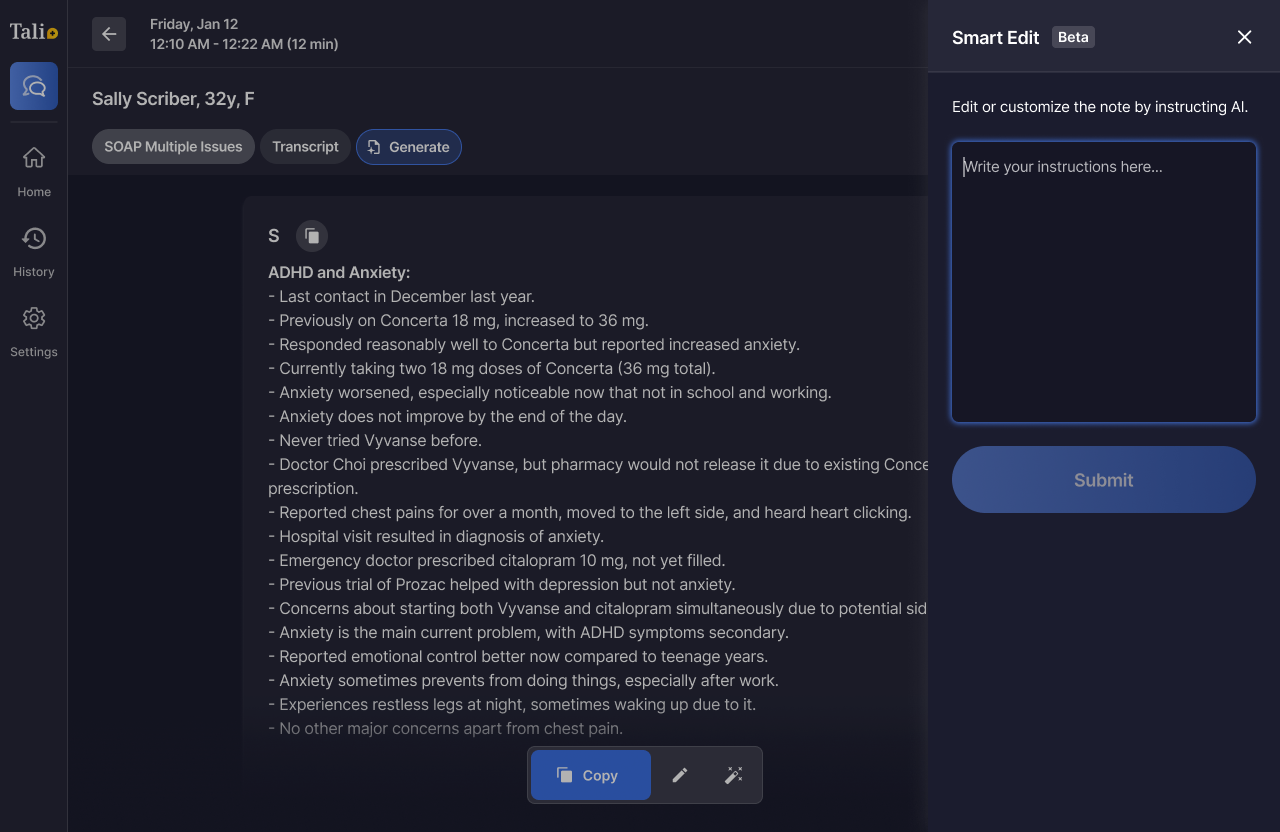

‘Smart Edit’ is a note editing feature that allows physicians to edit and customize their notes by prompting AI.

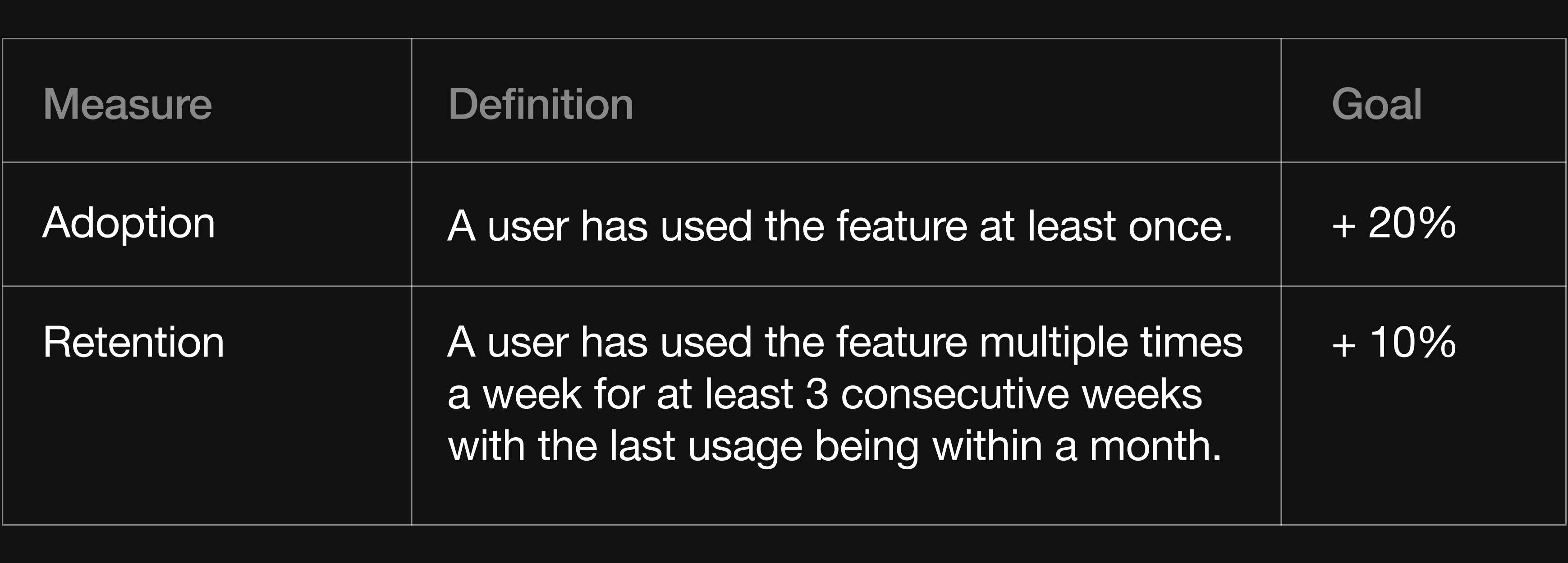

I collaborated with the Product Team to enhance the design of ‘Smart Edit’, with the goal of improving feature adoption by 20% and retention by 10% within two months.

Role

Designer

Design Timeline

2 weeks

Tools

Figma, Linear

The Problem

Following Smart Edit’s beta release, the feature’s usage stats were underwhelming. Only 9% of users had tried the feature, and only about half of those who tried it used it more than once.

Adoption rate: ~9%

Retention rate: ~4%

(The 'Smart Edit' MVP with underwhelming user engagement)
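To make the relationship between the two figures explicit, here is a minimal sketch of the arithmetic. It assumes both rates are measured against the whole user base, which is why retention lands at roughly half of adoption. The function name and the user counts are hypothetical and chosen only to mirror the percentages above; they are not real product data.

```python
def usage_rates(total_users, tried, repeated):
    """Return (adoption, retention) as fractions of the whole user base.

    Assumption: retention counts users who used the feature more than
    once, divided by all users (not only by those who tried it).
    """
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    adoption = tried / total_users
    retention = repeated / total_users
    return adoption, retention


# Hypothetical counts: 9% of users tried it, about half of those came back.
adoption, retention = usage_rates(total_users=1000, tried=90, repeated=45)
print(f"Adoption: {adoption:.1%}, retention: {retention:.1%}")
```

Measured this way, retention can never exceed adoption, so any gain in repeat usage depends on first getting more users to try the feature.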

Why usage rates were projected to be higher:

We had a lot of evidence that almost all generated notes were manually edited by physicians to some extent (either within Tali or in their Electronic Medical Records (EMR)).

The most common edits include:

1. Removing or replacing all instances of a specific word

2. Adjusting the structure of a section or adding sub-sections

3. Changing the patient’s pronouns

After analyzing the types of edits physicians made to their notes, it was clear that in most cases AI could effectively edit the notes on behalf of the physician. In theory, this would reduce editing time and even allow physicians to quickly customize specific notes.

Goals and Objectives

Increase feature adoption by 20% and retention by 10%

These goals were set by the product team, taking into account the current state of note generation and how users were editing their notes.

Business Value:

From a business perspective, ‘Smart Edit’ is a strategic feature because it gives the product team further insight into how physicians interact with AI and what an ideal note looks like to them. Engagement with ‘Smart Edit’ is therefore highly valuable. The team can use the information acquired through usage of the feature to develop the AI model, tailor communication towards users and even inform feature roadmaps (e.g. what type of note settings to develop as user preferences).

Key Considerations:

Low ‘Smart Edit’ usage isn’t necessarily a bad thing. In an ideal world, the original note output is perfect and does not need to be edited. As the AI model evolves and features are added or enhanced, the need for ‘Smart Edit’ will decrease, and we need to account for this when reflecting on our usage metrics.

Research

Research methods included user interviews with paid physician advisors and analyzing note outputs/edits captured in our database.

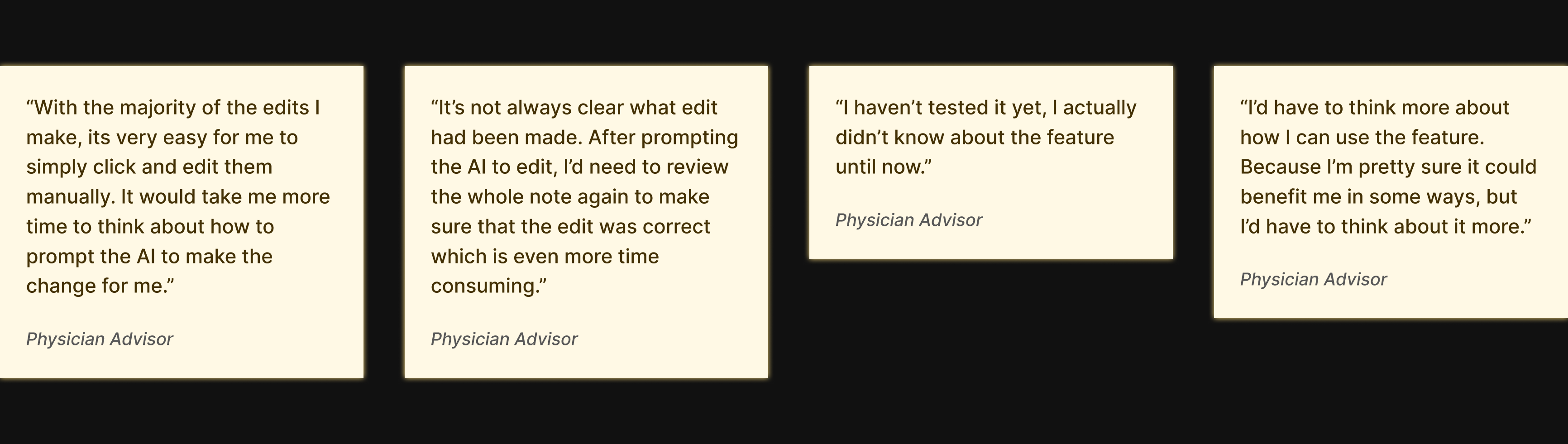

(Insights gathered during interviews with paid physician advisors)

Key Findings:

A theme in user interviews was that users didn’t view ‘Smart Edit’ as a valuable feature, explaining that it would take them more effort to prompt AI than to make edits manually.

When observing the captured prompts in our database, it appeared that many users didn’t know how to interact with Large Language Models (LLMs) or had a very limited view of how the feature could be used to their benefit.

Additionally, there seemed to be a lack of awareness surrounding the feature as many users did not know it existed.

Other known insights about our users:

Users are typically very busy and do not have much cognitive capacity for exploring and experimenting with new features outside of their current workflow.

Reducing clicks, especially around repetitive actions, is important to users as it tends to save a lot of time in the long run.

Ideation

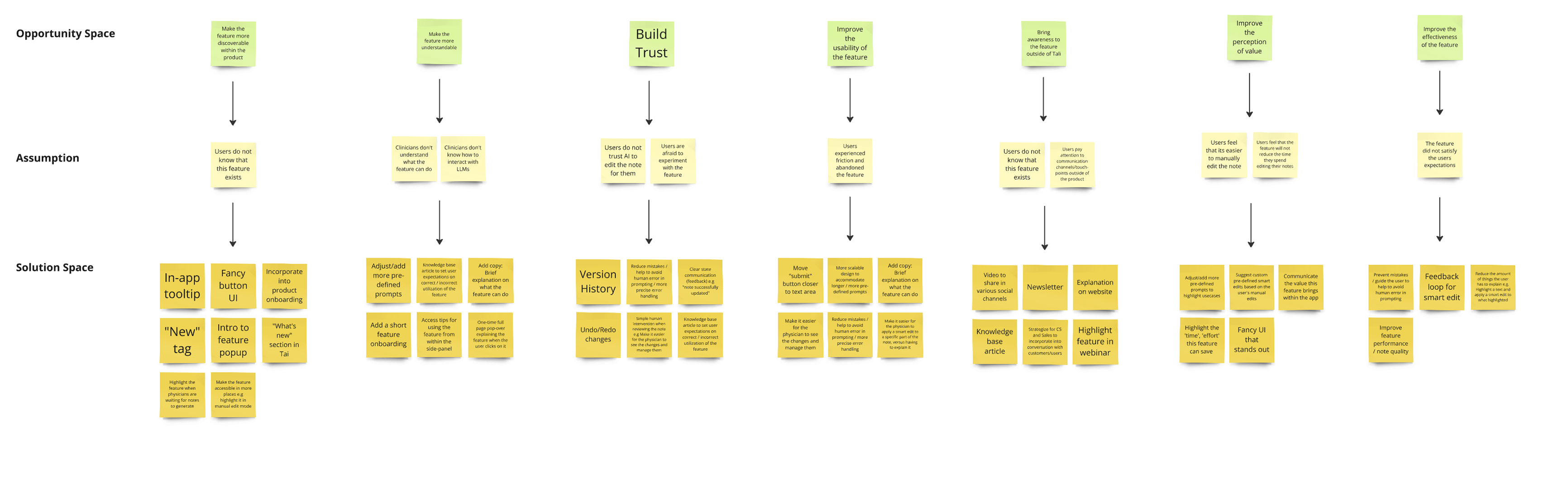

Given the insights gathered, we ran an opportunity mapping exercise with another designer. The exercise allowed us to consider the most fruitful opportunities for improvement, challenge any relevant assumptions, and then diverge into ideating solutions.

(Outcomes of opportunity mapping exercise)

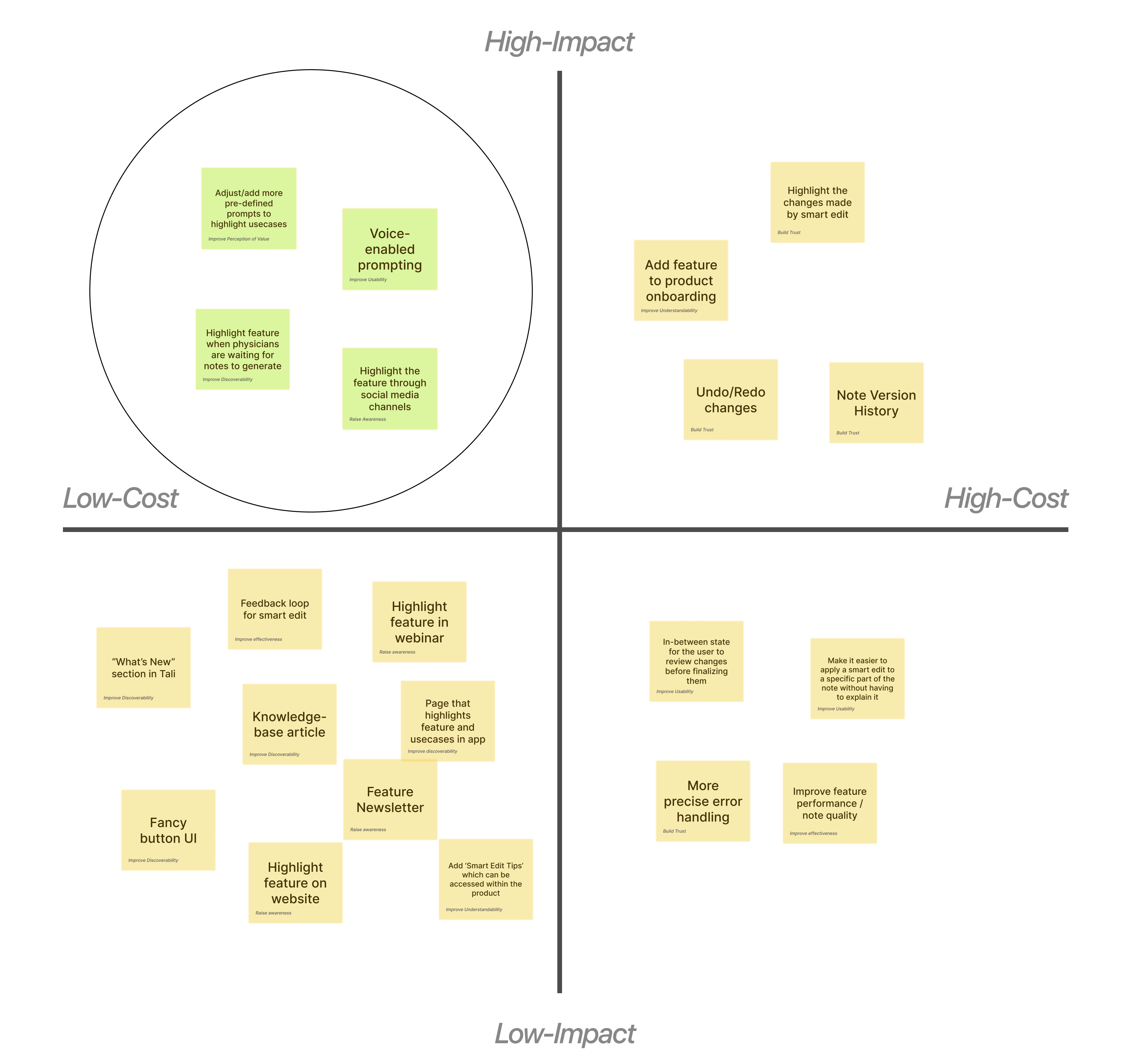

Following the opportunity mapping exercise, we ran a cost/benefit analysis to help us prioritize solutions based on cost-effectiveness within each key ‘opportunity space’.

(Outcomes of cost/benefit analysis)

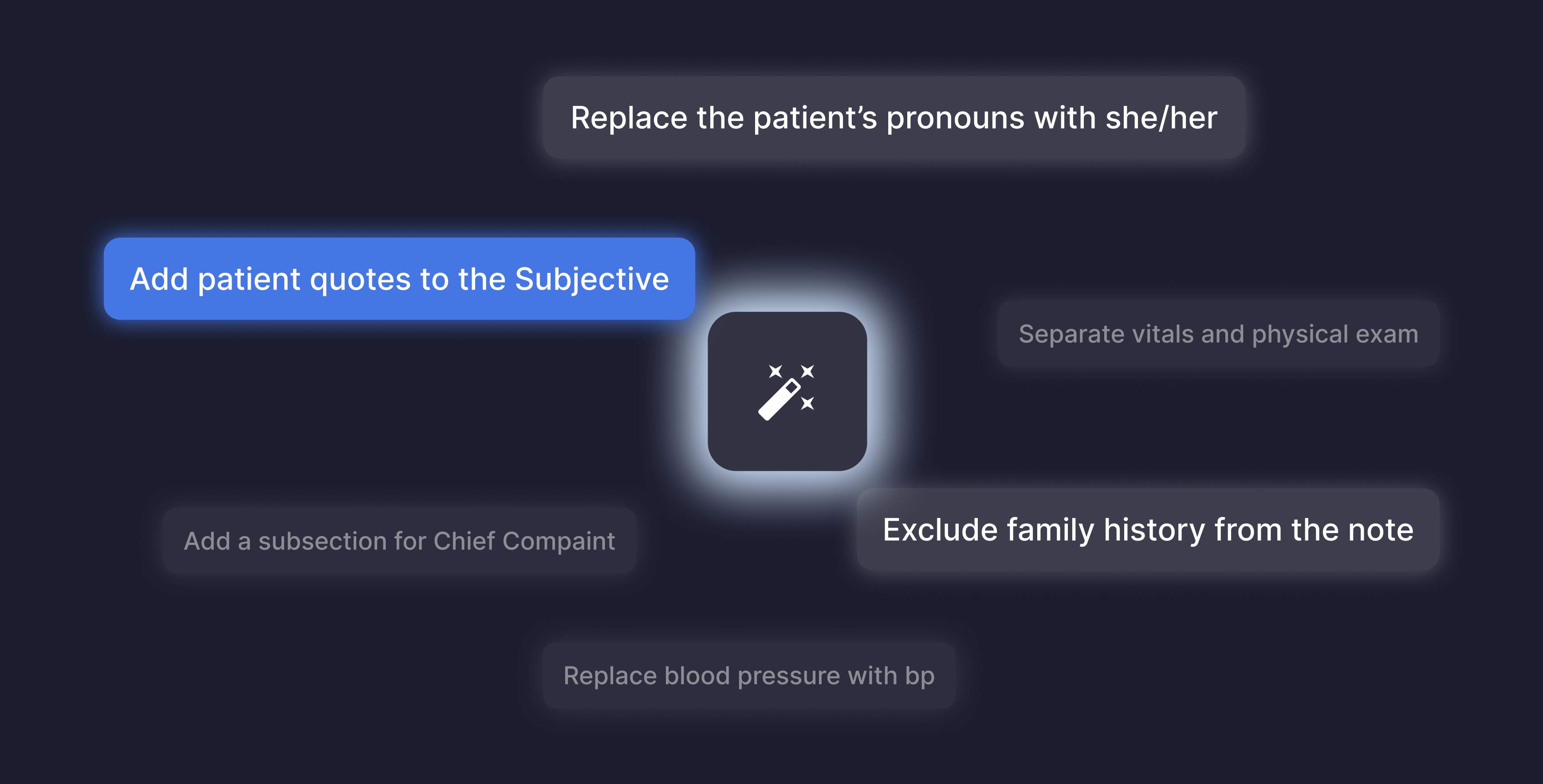

In the end, we prioritized subtle, yet cost-effective solutions which would increase:

1. The perception of value and understandability

By adding carefully selected pre-defined prompts to highlight certain use-cases that the feature can help with.

2. Usability of the feature

By allowing users to prompt using their voice or quickly access recent prompts with a single click.

3. Discoverability of the feature within the product

Through a tool-tip which is strategically disclosed to the user while a note is being generated.

4. Awareness of the feature outside of the product

Through newsletters, social media channels and webinars.

The Solution

Collectively, the ‘Smart Edit’ enhancements followed a multi-faceted approach to improve awareness and retention metrics.

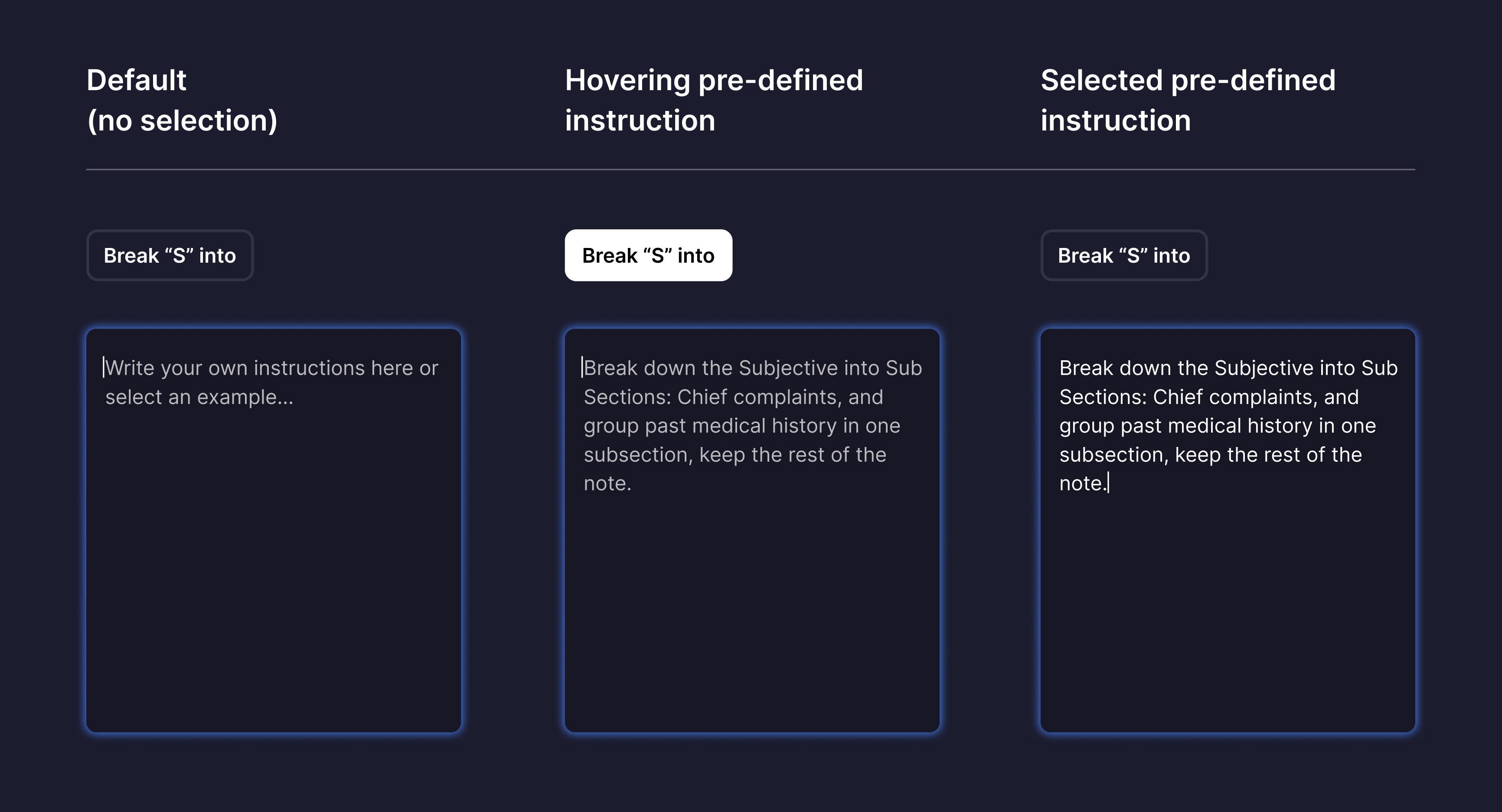

Since the 'Smart Edit' feature was located in a side panel, space constraints significantly influenced the design. As a result, we had to consider an efficient way to showcase a series of pre-defined prompts without overwhelming the user with text.

(Pre-defined prompt behaviour on text area)

Our pre-defined prompts were chosen strategically. After observing a significant number of prompts and working with our physician advisors, we chose a few which would be insightful to users, highlighting key use-cases and sparking curiosity while also being highly practical.

For the first time, users could now do things like restructure their note with a single prompt or add patient quotes to the Subjective with minimal effort.

Our data showed that users will often make the same type of edits across multiple notes, and therefore we personalized the ‘Smart Edit’ experience by allowing users to quickly access their recent editing prompts.

(Easily access the most recent prompt)
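As a rough illustration of how a recent-prompts list like this could behave, here is a minimal sketch. It is not the shipped implementation: the `RecentPrompts` class, the cap of three entries, and the rule that repeating a prompt moves it back to the top are all assumptions made for the example, and the sample prompts are simply drawn from the common edit types listed earlier.

```python
class RecentPrompts:
    """Keeps a user's latest editing prompts, most recent first.

    Hypothetical helper: the size limit and the de-duplication rule
    are assumptions for illustration only.
    """

    def __init__(self, limit=5):
        self.limit = limit
        self._prompts = []  # index 0 is the most recent prompt

    def add(self, prompt):
        text = prompt.strip()
        if not text:
            return  # ignore empty submissions
        if text in self._prompts:
            self._prompts.remove(text)  # re-used prompt moves to the top
        self._prompts.insert(0, text)
        del self._prompts[self.limit:]  # drop anything beyond the cap

    def latest(self):
        return list(self._prompts)


recent = RecentPrompts(limit=3)
recent.add("Change the patient's pronouns to they/them")
recent.add("Remove every instance of the word 'denies'")
recent.add("Change the patient's pronouns to they/them")  # repeated edit
print(recent.latest()[0])  # the prompt offered for one-click reuse
```

Keeping the list short and de-duplicated suits the limited space of a side panel, where only one or two recent prompts can realistically be surfaced.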

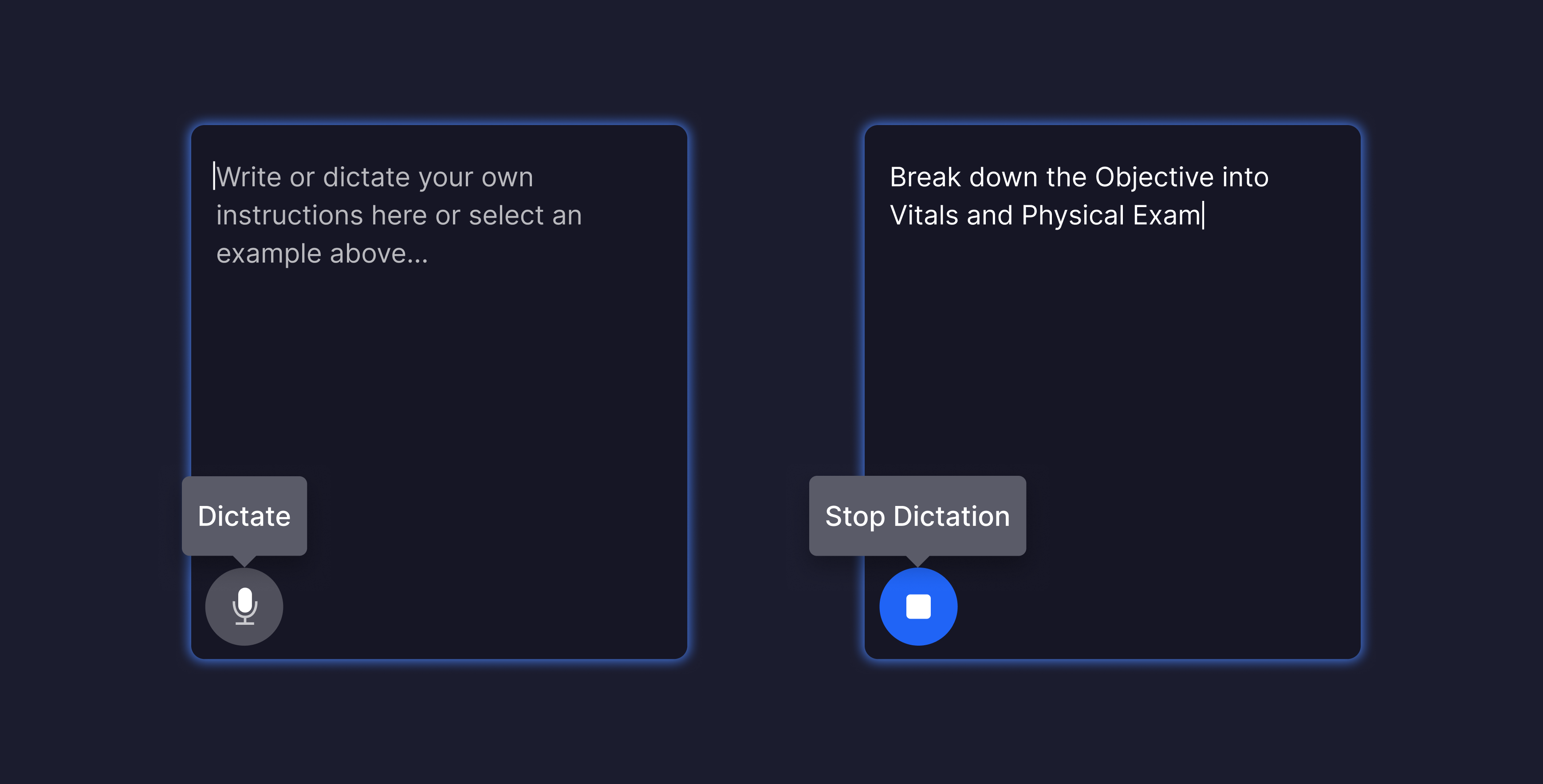

During our user testing of the beta release, something interesting that stood out to us was that many users interpreted ‘Smart Edit’ as a voice-enabled feature (which it wasn’t). Some even attempted to speak their prompt as soon as the side panel opened. Other users indicated that using voice was easier than typing and that they perceived it as less time-consuming. As a result, we added a voice feature which allows users to dictate their editing prompts.

(Use voice to dictate edits)

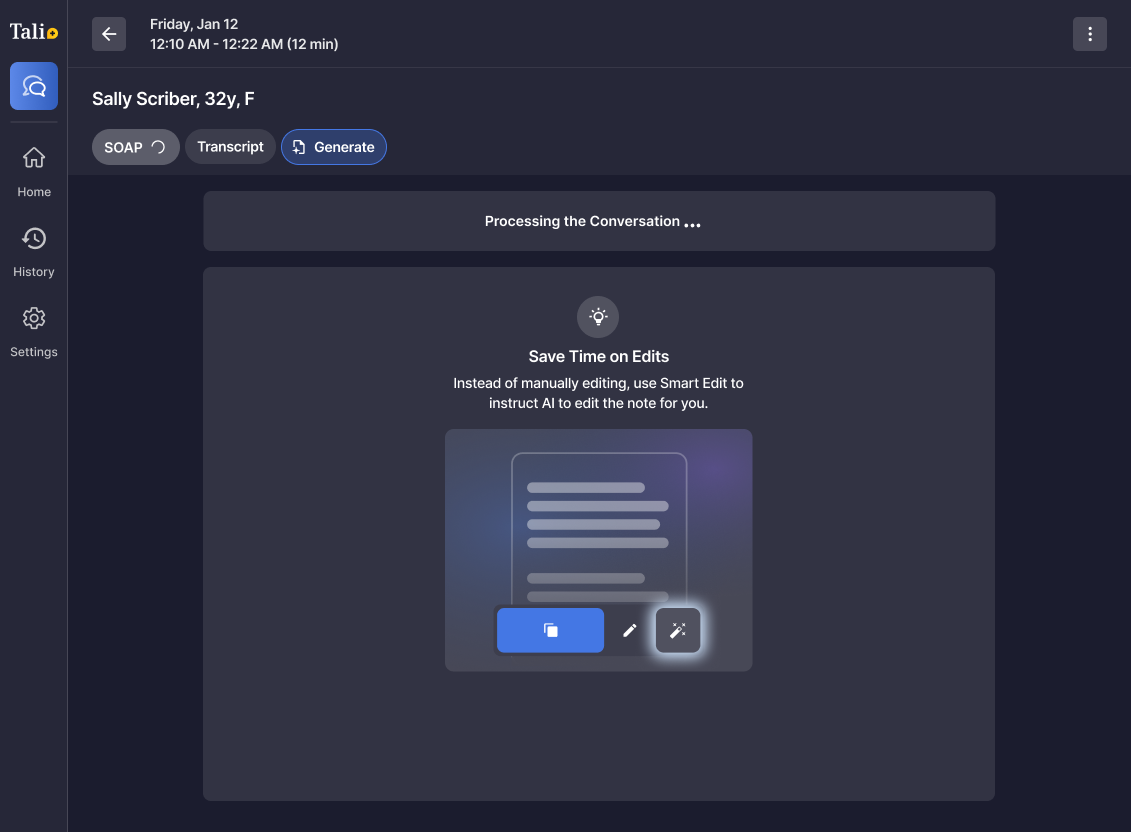

To spark curiosity and ultimately increase adoption of ‘Smart Edit’, we highlighted the feature using a strategic tool-tip that would appear while a note is being generated. Notes typically take 10-15 seconds to generate, and we viewed this as a good opportunity to disclose the tool-tip without intruding on the user experience, especially since the next step for many users is to review and make final edits to the note. With a few added conditions, we tailored the visibility of this tool-tip so that it would not become repetitive.

(Strategic tool-tip that appears while a note is generating)
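The exact visibility conditions are not listed here, so the sketch below only shows the general shape such a rule could take. Every condition in it is hypothetical: the `TooltipContext` fields, the `MAX_IMPRESSIONS` cap, and the idea of hiding the tool-tip once a user has tried the feature are assumptions for illustration, not the rules that were shipped.

```python
from dataclasses import dataclass

MAX_IMPRESSIONS = 3  # assumed cap on how often one user sees the tool-tip


@dataclass
class TooltipContext:
    note_is_generating: bool   # the 10-15 second generation window
    has_used_smart_edit: bool  # hypothetical: user already knows the feature
    times_shown: int           # hypothetical: impressions so far for this user


def should_show_tooltip(ctx: TooltipContext) -> bool:
    """Show the tool-tip only during generation, and only while it is still useful."""
    return (
        ctx.note_is_generating
        and not ctx.has_used_smart_edit
        and ctx.times_shown < MAX_IMPRESSIONS
    )


new_user = TooltipContext(note_is_generating=True, has_used_smart_edit=False, times_shown=0)
print(should_show_tooltip(new_user))
```

Keeping the rule in a single function like this would make it easy to tune the conditions later without touching the tool-tip's design.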

Results

Shortly after shipping the feature upgrades and launching an awareness campaign, we experienced an increase in adoption and retention.

We also noticed that users were now submitting higher-quality prompts and that there was a significant reduction in errors, which signalled that users were gaining a better understanding of how to interact with AI. Not only that, but we also saw a much wider variety of prompts, many of them a lot more detailed and highlighting new use-cases which were previously unknown to the team.

The benefits that Smart Edit has brought users have benefited the business as well. The increased curiosity and engagement with Smart Edit’s Large Language Model (LLM) has resulted in valuable user insights to inform feature roadmaps, tailor communication with users and improve the quality of note outputs.

Learnings and Reflection

Small changes can make a big difference. Improving engagement doesn't always have to be about reinventing the feature; sometimes it's about fine-tuning what’s already there. The small, cost-effective and user-informed enhancements helped us to greatly improve usage metrics in a short period of time.

Even though almost all notes are edited, many users will prefer to edit their notes manually and will only resort to Smart Edit for certain use cases or at certain points in their workflow (and that is totally fine!). Not every feature needs to serve every user. Empowering users to work in the way that suits them best often leads to higher satisfaction and long-term retention with the overall product.

How we can continue to improve:

There is still room to improve the personalization of this feature. Although the chosen pre-defined prompts can be great for sparking curiosity and clarifying how the tool can be used, they may not be useful to all users. Personalizing the pre-defined prompts based on the physician’s specialization, note template, or even editing patterns can be a great way to tailor the feature without demanding more effort from the user.

Building trust is an overarching theme when dealing with AI-driven features, and it’s an area where ‘Smart Edit’ can continue to evolve. Providing a clear note version history and a prominent undo function would give the user a stronger sense of control. Additionally, highlighting the generated edits would help users quickly identify what has changed, making it easier to review the note and increasing confidence in the feature.